AI is going to improve your documentation... but not the way you expect

TL;DR: Forget AI replacing documentation. I think it's making documentation more critical than ever. Every AI interaction is like onboarding a new developer who knows nothing about your codebase. The better your docs, the better your AI performs. We're entering an era where documentation quality directly impacts development velocity, and your senior developers' time might be better spent writing context for AI, not code.

The brutal reality of AI-assisted development

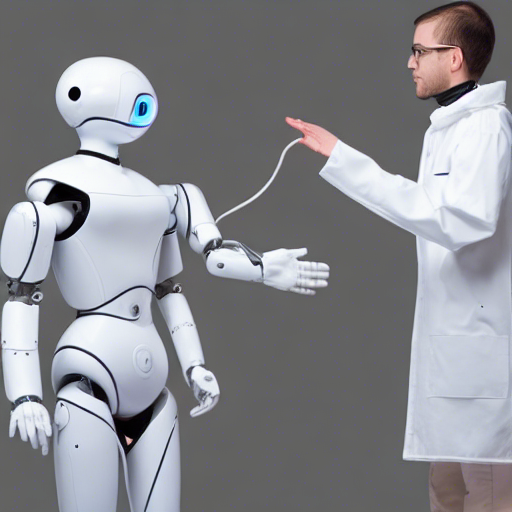

No, documentation has not become obsolete because "AI can just read the code." In fact, the opposite is true. Documentation has become the most high-leverage activity in your development workflow, and here's why: every time you prompt an AI agent, you're essentially onboarding a new junior developer who has zero context about your codebase, your architectural decisions, or your team's conventions.

Think about it. You pay for every token the AI processes. You wait for every context window to be filled. And most critically, the AI starts from scratch every single time. That brilliant refactoring it helped you with yesterday? Gone. The deep understanding of your module boundaries? Vanished. The nuanced grasp of why you chose that particular design pattern? Non-existent.

It's also better for the environment. AI inference is not cheap: if you can reduce the overhead of every request you make, it's not only good for your token budget, it's good for the planet! (I'm not kidding.)

This is where the paradigm shift happens. Good documentation is no longer just helpful, it's the primary interface between your team's knowledge and your AI tools.

Your documentation is now code—treat it that way

Modern AI-assisted development relies on specific documentation patterns that directly impact AI performance. The emergence of files like .cursorrules and claude.md isn't just another config file trend—these are the instruction manuals that transform a generic AI into your team's specialized assistant.

Here's what actually works:

Context files are your new superpower. A well-crafted claude.md file in your project root should contain:

- Your project's architecture decisions and the why behind them

- Code style preferences that go beyond what a linter can catch

- Common patterns and anti-patterns specific to your codebase

- Module boundaries and interaction rules

- Performance considerations and optimization strategies

It is not your README.md: focus on what the AI needs, keep what the humans need in the README.md, and minimize overlap.

Modular documentation beats monolithic files. Instead of one massive documentation file, you could structure your AI context like your code:

    CLAUDE.md               # Project-wide context
    .claude/
    └── commands/
        ├── pr.md           # How to create a PR
        ├── issue.md        # Pushing a new issue
        └── review.md       # How you check/review code
    src/
    ├── api/
    │   └── claude.md       # API-specific context
    └── database/
        └── claude.md       # Database context

Every "why" you document saves hundreds of future tokens or failed prompts. The AI can read your code and understand what it does. It cannot understand why you chose a particular approach, who the stakeholders are, or when certain patterns should be applied. This context is what separates useful AI suggestions from generic boilerplate.
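To make the modular layout a bit more concrete, here is a minimal sketch of how a tool might decide which context files apply to a given source file: start with the project-wide file, then add any context file found in the directories leading down to that file. The lookup rule, the `contextFilesFor` function, and the `existingContext` set are my assumptions for illustration, not a description of how any particular assistant actually resolves its context.

```javascript
// Sketch only: the lookup rule is an assumption, not documented tool behaviour.
// Paths mirror the example layout (root CLAUDE.md, per-module claude.md).
const existingContext = new Set([
  "CLAUDE.md",
  "src/api/claude.md",
  "src/database/claude.md",
]);

// Return the context files that apply to sourcePath, most general first.
function contextFilesFor(sourcePath, existing) {
  // "src/api/users.js" -> ["src", "api"]
  const dirs = sourcePath.split("/").slice(0, -1);
  const candidates = ["CLAUDE.md"]; // project-wide context
  let current = "";
  for (const dir of dirs) {
    current = current ? `${current}/${dir}` : dir;
    candidates.push(`${current}/claude.md`);
  }
  // Keep only the files that actually exist in the project.
  return candidates.filter((file) => existing.has(file));
}

console.log(contextFilesFor("src/api/users.js", existingContext).join(", "));
// CLAUDE.md, src/api/claude.md
```

The point of the sketch is the cost model: a change in `src/api/` only pays for the project-wide context plus the API context, not the database notes.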

The "how" is also worth documenting: not how the code works, but how to get work done. That is what the commands folder is for: descriptions of how your team or project does things, and how the AI can use the tools your team depends on (more on this in the next section).

But also remember that tokens cost money, time, and energy, so focus on documenting where your approach deviates from best practice or common wisdom. Things which "go without saying" do not need to be said.

MCP changes everything about tool integration

Model Context Protocol (MCP) is huge. I think it should fundamentally change how you think about AI agents interacting with your development environment.

Before MCP, connecting an AI to your tools meant:

- Custom integrations for each AI provider

- Brittle API wrappers or custom scripts

- Limited access to real development workflows

- Or lots of copy-pasting from one tool into the AI's chat

With MCP, your AI can now:

- Create tickets directly in your issue tracker

- Get the latest documentation and best practice (Context7 rocks)

- Run your CI/CD pipelines locally and interpret results (or get the results from your remote system)

- Query your production metrics while debugging

- Take screenshots of the local (in progress) UI to compare with a design or expected behavior

The protocol defines three core primitives that matter for developers:

- Tools: Functions your AI can execute (like "create_github_issue" or "run_test_suite")

- Resources: Data streams it can access (like "current_sprint_tickets" or "error_logs")

- Prompts/Commands: Reusable templates for common or team specific operations
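If the three primitives feel abstract, a toy version might help. The sketch below is an in-memory stand-in, not the official MCP SDK: it routes JSON-RPC-style requests (`tools/call`, `resources/read`, `prompts/get`) to plain functions. The request and response shapes are a simplified version of my reading of the spec, and every tool name, resource URI, and return value is a hypothetical stub.

```javascript
// Illustrative stand-in for an MCP server: plain in-memory maps, not the official SDK.
// All names, URIs and return values below are hypothetical stubs.
const tools = new Map([
  ["run_test_suite", ({ suite }) => `ran "${suite}": 0 failures (stubbed)`],
]);
const resources = new Map([
  ["logs://errors/latest", () => "2 errors in the last hour (stubbed)"],
]);
const prompts = new Map([
  ["review", ({ branch }) => `Review the changes on ${branch} against our house rules.`],
]);

// Look up a handler or fail loudly instead of returning undefined.
function lookup(map, key, kind) {
  const handler = map.get(key);
  if (!handler) throw new Error(`Unknown ${kind}: ${key}`);
  return handler;
}

// Route a JSON-RPC-style request to the matching primitive.
function handle({ method, params }) {
  switch (method) {
    case "tools/call": {
      const run = lookup(tools, params.name, "tool");
      return { content: [{ type: "text", text: run(params.arguments ?? {}) }] };
    }
    case "resources/read": {
      const read = lookup(resources, params.uri, "resource");
      return { contents: [{ uri: params.uri, text: read() }] };
    }
    case "prompts/get": {
      const render = lookup(prompts, params.name, "prompt");
      const text = render(params.arguments ?? {});
      return { messages: [{ role: "user", content: { type: "text", text } }] };
    }
    default:
      throw new Error(`Unsupported method: ${method}`);
  }
}

const result = handle({
  method: "tools/call",
  params: { name: "run_test_suite", arguments: { suite: "unit" } },
});
console.log(result.content[0].text); // ran "unit": 0 failures (stubbed)
```

A real server would also advertise what it offers (names, descriptions, input schemas) so the AI can discover it, which is exactly where your documentation quality shows up again.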

This means your documentation isn't just telling the AI about your system; it's giving it the ability to actually interact with and modify your system. The implications are profound: your documentation quality now directly impacts what your AI can do, not just what it knows.

The multiplication effect: documentation ROI in the AI era

Here's the brutal maths. Let's say you have a team of 10 developers. Pre-AI, you might onboard 1-3 new developers per year (or more if you are growing fast). With AI, you're effectively onboarding a new "developer" every single time someone opens their AI coding assistant.

If each developer uses AI assistance 20 times per day, that's 200 "onboardings" daily. 1,000 per week. 50,000 per year.
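For anyone who wants to plug in their own team's numbers, the arithmetic is below. The 5-day working week and 50 working weeks per year are my assumptions; they are what makes the weekly and yearly figures come out as quoted.

```javascript
// Back-of-the-envelope "onboarding" count.
// Assumptions: 5 working days per week, 50 working weeks per year.
const developers = 10;
const promptsPerDeveloperPerDay = 20;
const workingDaysPerWeek = 5;
const workingWeeksPerYear = 50;

const perDay = developers * promptsPerDeveloperPerDay;
const perWeek = perDay * workingDaysPerWeek;
const perYear = perWeek * workingWeeksPerYear;

console.log(perDay, perWeek, perYear); // 200 1000 50000
```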

Now multiply that by the cost difference between a well-documented onboarding (AI immediately understands your patterns and conventions) versus a poor one (AI suggests generic solutions that don't fit your architecture). The leverage is staggering.

Bad documentation used to slow down new hires. Now it slows down every single AI-assisted action across your entire team.

But here's the thing most teams miss: writing documentation for AI is fundamentally different from writing for humans. Humans can infer context, ask clarifying questions, and learn incrementally. AI needs everything spelled out explicitly, every single time.

What actually works: practical documentation strategies for AI

After watching teams struggle and succeed with AI-assisted development, clear patterns emerge:

1. Document the "why," not the "what"

Your AI can read UserService.validateEmail() and understand it validates emails. What it can't understand is why you're using a custom validator instead of a library, why email validation happens at this layer instead of the frontend, or why you allow plus-addressing but not subdomain addressing.

2. Make your context explicit and specific

Beyond the slightly redundant "follow best practices," write down how your "house rules" differ. For example:

- All API endpoints must return within 200ms

- Database queries must use the read replica for GET requests

- Never store PII in logs, even at debug level

- Use dependency injection for all services except logging

3. (optional) Provide examples of good and bad patterns

If you see the AI making the same mistakes repeatedly, remind it what good looks like.

    // Good: explicit error handling with context
    try {
      const user = await userService.find(id);
      if (!user) throw new NotFoundError(`User ${id} not found`);
    } catch (error) {
      // Log with enough context to debug, then rethrow so callers can react
      logger.error('User lookup failed', { userId: id, error });
      throw error;
    }

    // Bad: swallowing errors (every failure silently becomes null)
    const user = await userService.find(id).catch(() => null);

4. Version your AI context like code

Your claude.md and .cursorrules files should be in version control, reviewed in PRs, and updated when your architecture changes. They need to be reviewed by your very best team members, as a bad change can be repeated hundreds of times a day.

5. Have the AI help you keep things fresh

Stale AI context can be worse than no context; it actively misleads your assistant into suggesting outdated patterns. If you find yourself correcting a mistake, ask the AI what it learned from the interaction and if it should/could update the rule/context to avoid similar pitfalls in the future.

BONUS: Here is a Claude command you can use to help keep its context fresh: reflection.md

Happy prompting. Living in the future is AWESOME. As William Gibson says "The future is already here — it's just not very evenly distributed." ... yet.

(post created with help from Claude.ai, but based on my ideas, with human editing and such).